Ever since ChatGPT launched in November 2022, the tech-suspicious among us have had one question: at what point is this all going to go wrong? Forget AI replacing actors and writers. At what point will Skynet become conscious, launch the nukes, and wipe humankind off the face of the Earth? It sounds like paranoia fuelled by ’80s apocalyptic action movies, sure. But even those involved in the development of AI have warned about going too far, too fast.

“These things could get more intelligent than us and could decide to take over, and we need to worry now about how we prevent that happening,” Geoffrey Hinton, the ‘godfather of AI’, warned recently. “I thought for a long time that we were, like, 30 to 50 years away from that… Now, I think we may be much closer, maybe only five years away from that,” he said, having quit his job at Google in order to sound the alarm. “This isn’t just a science fiction problem. This is a serious problem that’s probably going to arrive fairly soon, and politicians need to be thinking about what to do about it now,” Hinton concluded.

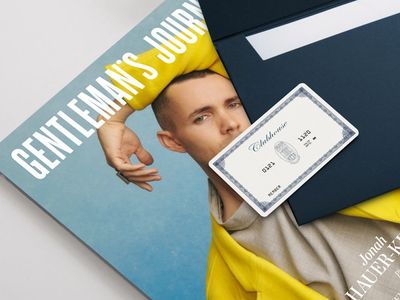
